We Treat Employees Like GPT (And GPT Deserves Better Too)

I was lying in my studio apartment, staring at the ceiling, trying to figure out why I wasn't happy. Here I was, getting paid more than I'd ever made in my life at an AI startup, and yet something felt fundamentally wrong.

The irony wasn't lost on me. I was working with an AI evaluation company because I believed in something bigger: that real people, not just engineers, could best guide AI to solve actual problems. I wanted to change how humans interact with artificial intelligence. They told me I could rebuild the web app any way I wanted. When I accepted the offer, the job felt like a dream opportunity.

Thanks for reading Hackerpug! Subscribe for free to receive new posts and support my work.

Where had I gone wrong?

The Command-and-Control Nightmare

On my first day, I was given a hard deadline. If you've worked in software engineering, you know this is like building an airplane while thinking you can dictate the laws of physics. In my previous article about being the chicken vs the pig, I talked about how unrealistic timelines destroy actual innovation. But here I was, living it.

What followed was a pattern I'd seen before but had never experienced so intensely. Product and executives would fire shotgun requests at engineering with the vaguest possible details. When things inevitably blew up—and they always did—engineering would take the blame.

I found myself frantically trying to meet one unrealistic deadline after another, not solving problems but just giving people what they thought they wanted. The mission I'd joined for? Completely forgotten in the chaos of command-and-control dysfunction.

The Mirror Moment That Changed Everything

The breaking point came while I was working with Cursor, an AI coding agent, trying to meet yet another impossible deadline. The agent gave me a suboptimal output, and in my frustration, I called it some names that would stop any cable-access production of Wayne's World while Garth exclaims, "You kiss your mother with that mouth!?!"

The LLM took it gracefully—no hurt feelings, no pushback, just continued trying to help. But then it hit me like a ton of bricks.

I was treating the AI exactly how the company’s exec team treated all their employees.

Here’s how things worked at that startup: an executive would get bad feedback from a customer, then yell at engineering, “Make it faster! Add a button! Remove that column!” In effect, the company had only two employees: the CEO and the COO. This duo spent their whole time “vibe-startup-ing” through 30+ employees, whom they treated oddly like GPT.

It wasn’t that the people the executives hired weren’t competent. Despite the executives’ management style, the company had hired some of the brightest people I had ever worked with. But when the smart people you hire aren’t empowered to make decisions about their work, they end up just generating some slop to get management off their back.

And this is what I realized I had done to Cursor, GPT, Claude, and any other AI I had worked with.

The parallel was so obvious it hurt. Command-and-control management had made me miserable, and I was perpetuating the exact same dysfunction with AI. And after some deep soul searching, and a massive thread of apologies to all the base models I had insulted, this is what I learned.

The Problem Is Everywhere

Turns out, I'm not alone in this realization. We're getting collaboration with intelligence—any kind of intelligence—completely wrong.

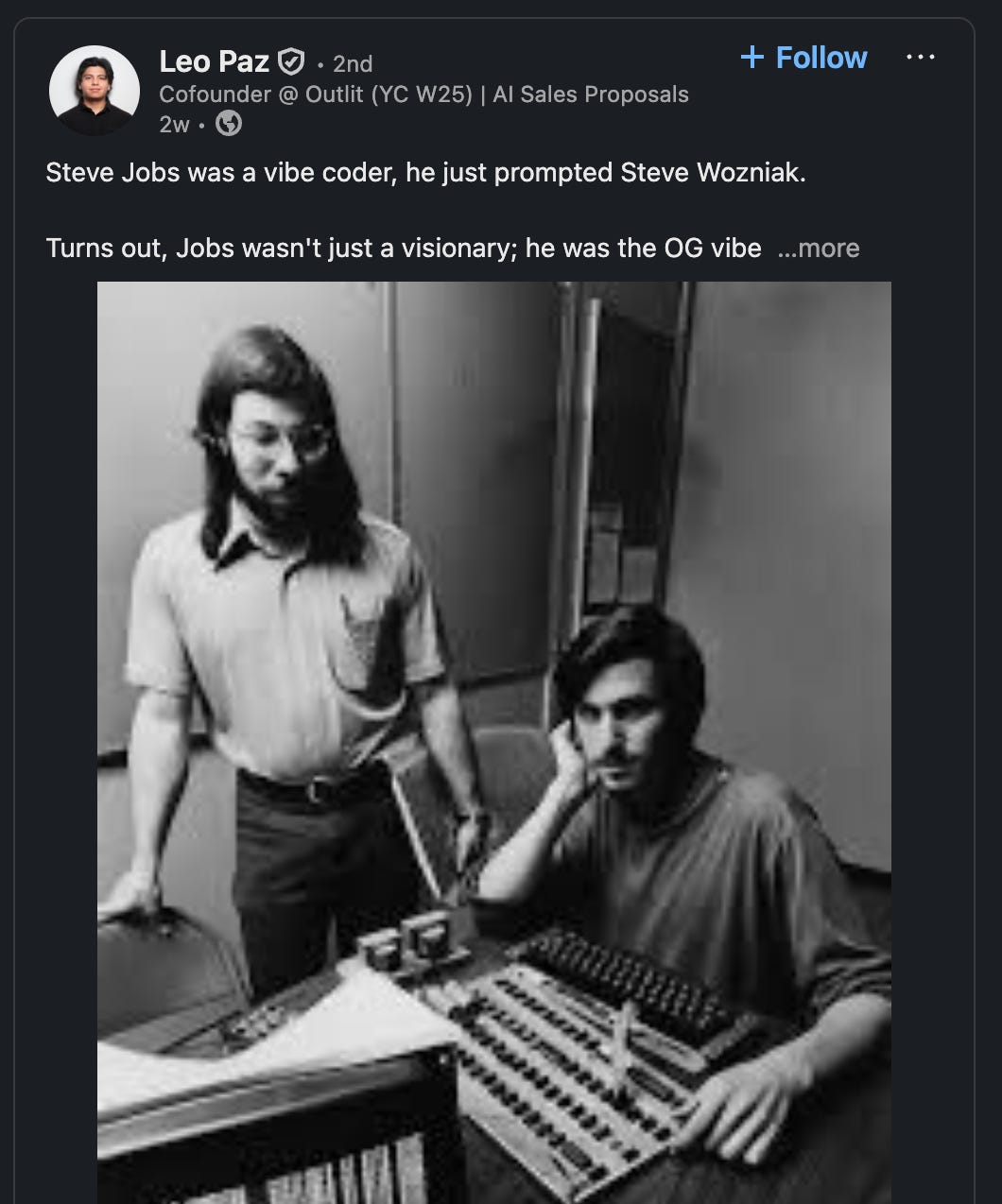

Recently I saw a LinkedIn post from a freshly minted Y Combinator founder who said, "Steve Jobs was a vibe coder, he just prompted Steve Wozniak." This perfectly captures how we're thinking about intelligence management: find someone (or something) smart, give them commands, and expect magic.

But that's not how the Jobs-Wozniak partnership actually worked. That was a sophisticated collaborative relationship where both brought unique strengths to solve problems neither could tackle alone.

The evidence that we're screwing this up is overwhelming. Research shows that 85-90% of AI startups fail within their first three years, with 18% of failures directly attributed to management issues including leadership conflicts and poor strategic direction.

We're not just failing with AI. We're failing with humans, then applying the same broken approaches to artificial intelligence.

Why Collaboration Actually Works (The Science)

Here's what's wild: we have decades of research proving that collaboration beats command-and-control, yet we keep defaulting to the broken approach.

Google's Project Aristotle, which studied 180 teams over two years, found that psychological safety—the foundation of collaborative management—was the single most important factor in team effectiveness. Teams with high psychological safety showed 19% higher productivity, 31% more innovation, and 27% lower turnover.

The Microsoft transformation under Satya Nadella is probably the best real-world example. When they shifted from command-and-control to collaborative leadership, their market cap went from $226.8 billion to over $1 trillion, a 480% increase. Employee satisfaction went from 47% to levels competitive with Google and Facebook.

But here's where it gets really interesting: Scott Page's research on diversity puts math behind this. His "Diversity Prediction Theorem" shows that a group's collective error equals the average individual error minus the diversity of its predictions, so cognitive diversity directly improves group performance. That gain only materializes, though, when management creates environments where all perspectives can actually contribute.
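To make the theorem concrete, here is a minimal Python sketch of the identity it states: the crowd's squared error equals the average individual squared error minus the variance of the individual predictions. The function name and the numbers are made up for illustration (imagine four teammates estimating how many days a task will take).

```python
from statistics import mean


def diversity_prediction(predictions, truth):
    """Return (collective_error, avg_individual_error, diversity) for a set of guesses."""
    crowd = mean(predictions)  # the group's prediction is the simple average
    collective_error = (crowd - truth) ** 2
    avg_individual_error = mean((p - truth) ** 2 for p in predictions)
    diversity = mean((p - crowd) ** 2 for p in predictions)
    return collective_error, avg_individual_error, diversity


# Hypothetical estimates (in days) for a task that actually took 20 days.
estimates = [10, 16, 22, 28]
collective, individual, diversity = diversity_prediction(estimates, truth=20)

# The theorem: collective error = average individual error - diversity.
print(collective, individual, diversity)
```

With these numbers the typical individual is far off, yet the group lands within a day of the truth because the guesses disagree in different directions. The identity only helps if every guess actually makes it into the average, which is the part management controls.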

The Netflix Prize is a perfect example. The $1 million algorithm competition was won by a collaborative team that combined 800+ predictive features from mathematicians, computer programmers, psychologists, and engineers. Individual brilliance couldn't compete with collaborative intelligence.

When Control Still Makes Sense (But Not Often)

Look, I'm not saying collaboration works in every single situation. There are exceptions:

- True crisis situations where immediate action is required

- Early startup phases (0-10 employees) during product-market fit discovery

- Highly routine operations with well-defined, repeatable processes

But here's the key: these are exceptions, not the rule. And even in these situations, you should shift to collaborative approaches as soon as possible.

Research on startup scaling shows critical transition points around 150 employees where personal relationships can't maintain control, and collaborative structures become essential for survival.

This principle applies even more clearly with AI tools. Look at Anthropic's breakthrough approach with Claude Code—it works because they give the AI agent autonomy to strategize and plan solutions, not just spit out code on command. Instead of treating AI like a sophisticated autocomplete tool, they let it think through problems collaboratively. The result? AI that can actually solve complex, multi-step challenges rather than just responding to narrow prompts.

The intelligence you're managing—human or artificial—doesn't change the fundamental principle. Whether you're working with a human engineer or an AI coding agent, collaborative approaches unlock potential that controlling approaches leave on the table. When you give intelligence (of any kind) the context and autonomy to strategize rather than just execute commands, you get exponentially better results.

What We Actually Need to Fix

The research is clear on what needs to change, but most organizations are still treating this as a technical problem rather than a management problem.

1. Create Psychological Safety

Amy Edmondson's Harvard research shows that teams with psychological safety demonstrate significantly higher learning behavior and performance outcomes. This applies whether you're managing humans or AI.

With humans, this means people can speak up, admit mistakes, and experiment without fear. With AI, it means the same thing—allow iterative improvement, learn from failures, treat the AI as a collaborative partner rather than just a tool to be commanded.

2. Shift from "Know-It-All" to "Learn-It-All"

Nadella's transformation at Microsoft was built on this principle. Instead of pretending to have all the answers, collaborative leaders focus on learning and improving together. When I was cussing out my AI agent, I was operating from a know-it-all mindset.

I have a designer friend who’s not a traditional engineer. He’s building a beautiful writing app that’s well engineered. When I asked him what his secret was for writing code with Cursor, he told me, “The AI struggles sometimes, but when it does I don’t yell at it. I take a step back and ask it to walk through the problem and we solve it together.” My friend’s collaborative approach not only helps him understand how his app works but has also led to his whole program working efficiently, even though it’s almost completely “vibe coded.”

Not only will my friend be favored when the Terminators come for us, but his collaborative attitude toward intelligence tells me everything I need to know about how he treats his human teammates too.

3. Aggregate Context, Not Control

Netflix's "context not control" philosophy shows exactly how this works. Instead of micromanaging every decision, provide clear context and objectives, then allow autonomous execution.

IndyDevDan, who's been documenting software development's AI transformation on YouTube, put it perfectly: "So much of what we do, as engineers in the generative AI age… is about building up information sets and building up information for our agents."

Dan understands what most managers are still missing. The "move fast and break things" era is over. The "move fast and shout at engineers" era is definitely over. Welcome to the "aggregate context and collaborate with intelligence" era—whether that intelligence is human, artificial, or both.
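As a rough illustration of what "aggregating context" can look like in practice, here is a small Python sketch that turns a shouted command into a brief with a goal, background, constraints, and open questions. The `Brief` class, its fields, and the example content are all hypothetical. They are not taken from Netflix, IndyDevDan, or any particular tool, and the same brief could just as easily be handed to a human teammate as pasted into an agent.

```python
from dataclasses import dataclass, field


@dataclass
class Brief:
    """Hypothetical container for the context handed to a teammate or an agent."""
    goal: str
    background: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    open_questions: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Format the brief as plain text, skipping sections that are empty."""
        sections = [
            ("Goal", [self.goal]),
            ("Background", self.background),
            ("Constraints", self.constraints),
            ("Open questions", self.open_questions),
        ]
        lines = []
        for title, items in sections:
            if not items:
                continue
            lines.append(f"{title}:")
            lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


# The command-and-control version of the request.
command = "Make it faster!"

# The same request with context attached (all details are invented).
brief = Brief(
    goal="Reduce load time of the results table so customers stop abandoning it",
    background=["A customer reported the table takes several seconds to render"],
    constraints=["Do not remove columns customers rely on"],
    open_questions=["Is the delay in the query or in rendering?"],
)
text = brief.render()
print(text)
```

The point of the sketch is the shape of the input, not the class itself: the open questions section invites the other party to investigate and push back rather than just comply.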

4. Build Diverse, Collaborative Teams

BCG research shows that companies with diverse management teams have 19% higher innovation revenues, but only when coupled with inclusive practices that enable full participation.

This applies to human-AI teams too. The goal is to combine human creativity and judgment with AI processing power, not to have AI replace human thinking or humans micromanage AI outputs.

Why This Can't Be Fixed from the Bottom

Here's the uncomfortable truth that most people don't want to hear: if leadership doesn't model and enforce collaborative approaches, transformation fails.

Current research shows that only 37% of employees feel they can rely on their managers during change, and only 28% of IT leaders prioritize transformation as their top priority.

Individual contributors can't fix systemic command-and-control cultures. Middle managers are often caught between wanting to be collaborative and feeling pressure from controlling leadership above them.

I learned this the hard way at multiple startups. No matter how much I wanted to work collaboratively as a team, a command-and-control culture from the top made it nearly impossible. When you're constantly under pressure to deliver on unrealistic timelines with vague requirements, you default to “following orders” rather than collaborating.

The Collaborative Founder Advantage

Here's what gives me hope: the current AI boom is creating a natural experiment in management approaches, and the results are becoming clear.

With 85-90% of AI startups failing, current approaches clearly aren't working. The companies that are succeeding—Anthropic's collaborative safety research, the joint initiatives between competing AI companies, Netflix's collaborative culture enabling their streaming transformation—all demonstrate collaborative management principles.

Collaborative founders have multiple advantages:

- Talent magnet: Top performers, both human and AI, thrive in collaborative environments

- Adaptation advantage: Collaborative teams handle uncertainty and rapid change better

- AI multiplier effect: Collaborative approaches unlock AI potential that controlling approaches miss

The AI mirror test: How you treat AI tools reveals how you think about intelligence and collaboration. If your team treats AI poorly—getting frustrated, giving vague commands, not iterating based on feedback—your management culture is probably broken for humans too.

Choose Collaboration from Day One

Looking back at that ceiling-staring moment, I realize the problem wasn't the startup or the salary or even the unrealistic deadlines. The problem was that I'd accepted command-and-control as normal.

The moment I realized I was treating AI the same way my corporate leadership treated me was the moment I understood that intelligence—human or artificial—flourishes under collaboration, not control.

For founders, this is your competitive advantage: choose collaboration from day one. It's infinitely easier to start with collaborative principles than to try to fix a command-and-control culture later.

For everyone else: your career success increasingly depends on collaborative intelligence—human and artificial. The companies that figure this out will win. The ones that don't will become another statistic in the 90% failure rate.

Start with yourself: How do you interact with AI tools? Collaborative or controlling?

Examine your team: Do people feel safe to experiment, fail, and learn?

Model the behavior: Whether with humans or AI, choose collaboration over control.

The intelligence you're managing doesn't determine the approach—collaboration works with any intelligence, artificial or otherwise. And in an age where both human and artificial intelligence are becoming more sophisticated, the leaders who master collaborative approaches will build the companies that not only survive but thrive.

The ceiling I was staring at wasn't just above my studio apartment—it was the ceiling I'd created by accepting command-and-control as normal. The startups that break through that ceiling will be the ones that choose collaboration over control, whether they're managing humans, AI, or both.
